From Audio To Mind-Map

Idea 💡

The idea was to develop an application that, starting from an audio recording of a discussion, a meeting, etc., generates a “meaningful mind-map diagram” representing the key points touched on. Combined with a summary, this representation provides more complete and understandable information.

Architecture of solution

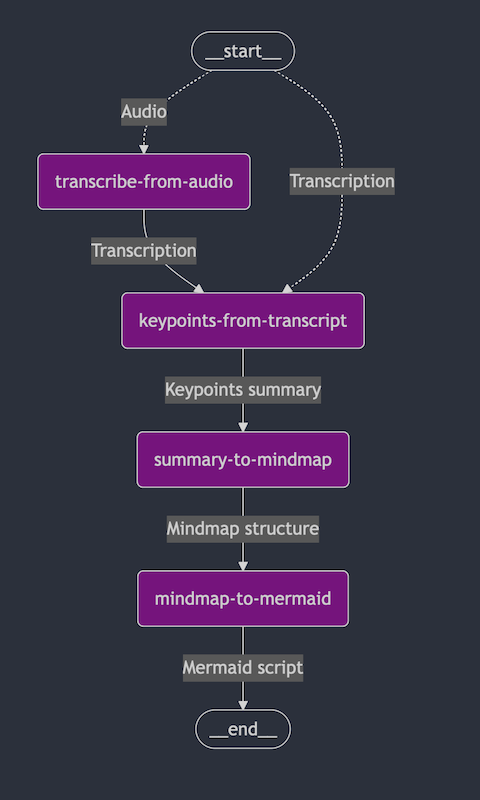

The architecture of the solution is shown below:

The agentic architecture is composed by a series of agents, each one responsible for a specific task. The sequence of agents is:

- transcribe-from-audio: This agent is responsible for transcribing the provided audio.
- keypoints-from-transcript: This agent is responsible for extracting the key points from the given transcription.
- summary-to-mindmap: This agent is responsible for arranging the key points into a kind of ontology, providing a hierarchical representation of the information.
- mindmap-to-mermaid: This agent is responsible for transforming the mind-map representation into Mermaid syntax, ready for visualization.
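The last step can be illustrated with a minimal sketch. It assumes the mind-map produced by summary-to-mindmap is a nested tree of labelled nodes. The `MindMapNode` class and the `to_mermaid` function are hypothetical names, not taken from the app. The tree is rendered with Mermaid's indentation-based `mindmap` diagram type, where each level of nesting in the tree becomes one more level of indentation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MindMapNode:
    """Hypothetical node of the hierarchical mind-map built by summary-to-mindmap."""
    label: str
    children: list[MindMapNode] = field(default_factory=list)


def _escape(label: str) -> str:
    # Brackets and parentheses delimit node shapes in Mermaid mindmaps,
    # so replace them with spaces and collapse whitespace (a simplifying assumption).
    for ch in "()[]{}":
        label = label.replace(ch, " ")
    return " ".join(label.split())


def to_mermaid(root: MindMapNode, indent: str = "  ") -> str:
    """Render a mind-map tree as Mermaid `mindmap` syntax (hierarchy = indentation)."""
    lines = ["mindmap", f"{indent}root(({_escape(root.label)}))"]

    def walk(node: MindMapNode, depth: int) -> None:
        # Depth-first traversal: each child is indented one level deeper than its parent.
        for child in node.children:
            lines.append(f"{indent * depth}{_escape(child.label)}")
            walk(child, depth + 1)

    walk(root, 2)
    return "\n".join(lines)


if __name__ == "__main__":
    tree = MindMapNode("Weekly meeting", [
        MindMapNode("Budget", [MindMapNode("Q3 overrun")]),
        MindMapNode("Hiring", [MindMapNode("Two backend roles")]),
    ])
    print(to_mermaid(tree))
```

Indentation alone encodes the hierarchy, so a plain depth-first walk is enough. A real agent would more likely ask the LLM to emit this text directly, or to fill a structured output that is then rendered like this.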

Notes: 👈

As shown in the diagram, the architecture allows you to bypass the transcription step if you already have a transcript.
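The agent sequence and the transcription bypass can be sketched as a simple composition of functions. This is a minimal sketch that assumes each agent can be modelled as a plain Python function. `run_pipeline` and the type aliases are hypothetical names. The real agents would call AssemblyAI and OpenAI rather than the stubs in the usage example.

```python
from typing import Callable, Optional

# Hypothetical signatures: each agent is modelled as a plain function.
Transcriber = Callable[[bytes], str]            # transcribe-from-audio
KeypointExtractor = Callable[[str], list[str]]  # keypoints-from-transcript
MindMapBuilder = Callable[[list[str]], dict]    # summary-to-mindmap
MermaidRenderer = Callable[[dict], str]         # mindmap-to-mermaid


def run_pipeline(
    transcribe: Transcriber,
    extract_keypoints: KeypointExtractor,
    build_mindmap: MindMapBuilder,
    render_mermaid: MermaidRenderer,
    audio: Optional[bytes] = None,
    transcript: Optional[str] = None,
) -> str:
    """Run the agents in order; skip transcription when a transcript is supplied."""
    if transcript is None:
        if audio is None:
            raise ValueError("provide either audio or an existing transcript")
        transcript = transcribe(audio)
    keypoints = extract_keypoints(transcript)
    mindmap = build_mindmap(keypoints)
    return render_mermaid(mindmap)


if __name__ == "__main__":
    # Stub agents standing in for the real speech-to-text and LLM calls.
    def fake_transcribe(audio: bytes) -> str:
        print("transcribing...")
        return audio.decode("utf-8")

    def split_sentences(text: str) -> list[str]:
        return [s.strip() for s in text.split(".") if s.strip()]

    def flat_mindmap(points: list[str]) -> dict:
        return {"root": "Meeting", "children": points}

    def render(mindmap: dict) -> str:
        body = "\n".join(f"    {c}" for c in mindmap["children"])
        return f"mindmap\n  root(({mindmap['root']}))\n{body}"

    # Full pipeline starting from audio...
    print(run_pipeline(fake_transcribe, split_sentences, flat_mindmap, render,
                       audio=b"Budget review. Hiring plan."))
    # ...or bypass transcription with an existing transcript.
    print(run_pipeline(fake_transcribe, split_sentences, flat_mindmap, render,
                       transcript="Roadmap. Risks."))
```

Passing `transcript=` means the transcription agent is never called, which mirrors the bypass in the architecture diagram.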

Demo

I’ve developed a demo app for the AssemblyAI Challenge as a Sophisticated Speech-to-Text use case.

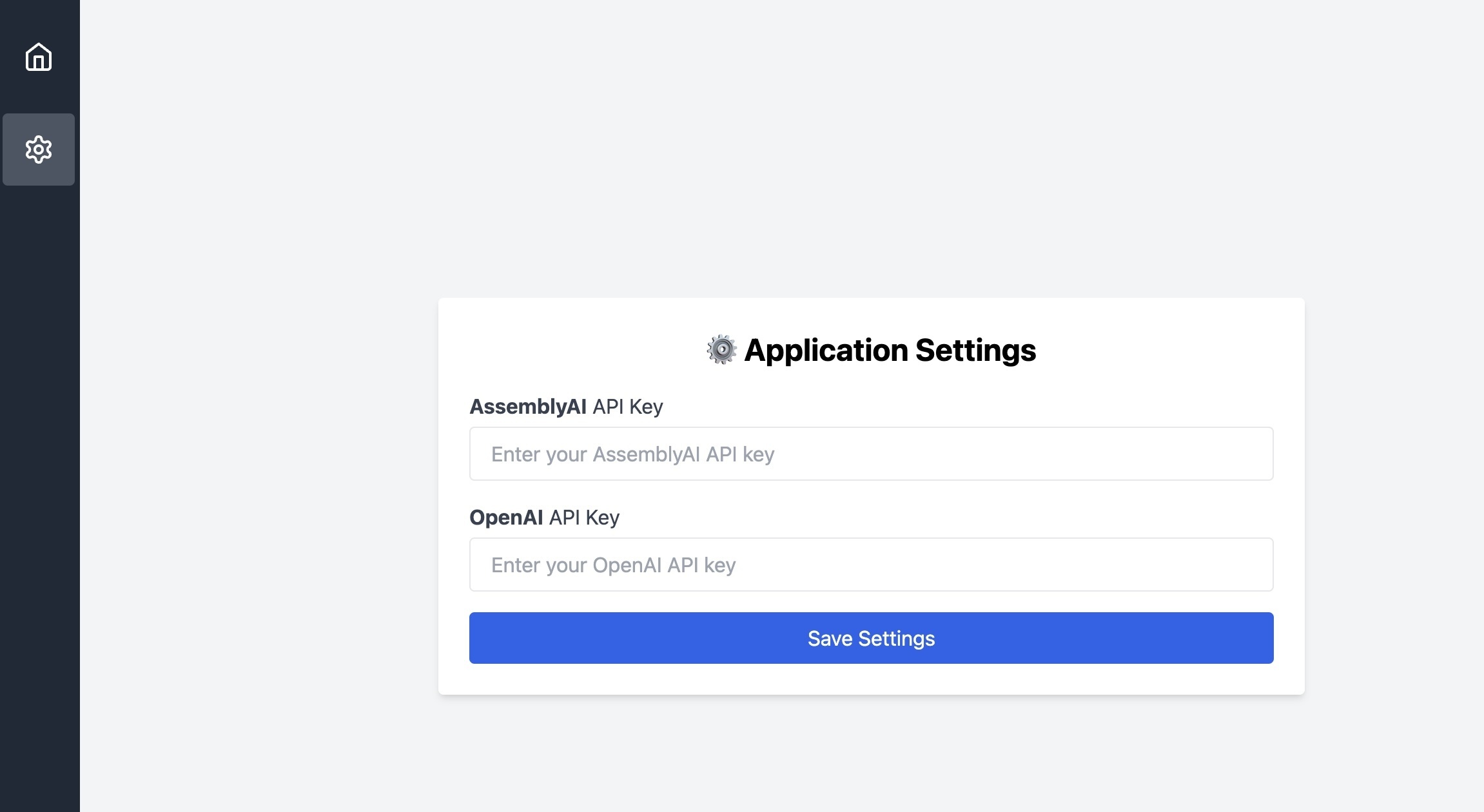

The application is available here. To use the voice functionality you need an AssemblyAI API key, while the other agents require an OpenAI API key.
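A settings check along these lines could decide which keys are required before running the agents. This is a hypothetical sketch: the setting names `ASSEMBLYAI_API_KEY` and `OPENAI_API_KEY` and the `missing_keys` helper are assumptions, not the demo app's actual configuration.

```python
import os

# Hypothetical setting names; the demo app may store its keys differently.
ASSEMBLYAI_KEY = "ASSEMBLYAI_API_KEY"  # needed only for the voice (transcription) step
OPENAI_KEY = "OPENAI_API_KEY"          # needed by the remaining agents


def missing_keys(settings: dict[str, str], needs_transcription: bool) -> list[str]:
    """Return the names of required API keys that are absent or blank."""
    required = [OPENAI_KEY]
    if needs_transcription:
        required.insert(0, ASSEMBLYAI_KEY)
    return [name for name in required if not settings.get(name, "").strip()]


if __name__ == "__main__":
    env = {name: os.environ.get(name, "") for name in (ASSEMBLYAI_KEY, OPENAI_KEY)}
    missing = missing_keys(env, needs_transcription=True)
    print("missing keys:", ", ".join(missing) if missing else "none")
```

When starting from an existing transcript, `needs_transcription=False` means only the OpenAI key is checked.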

Below are some representative screenshots:

Settings

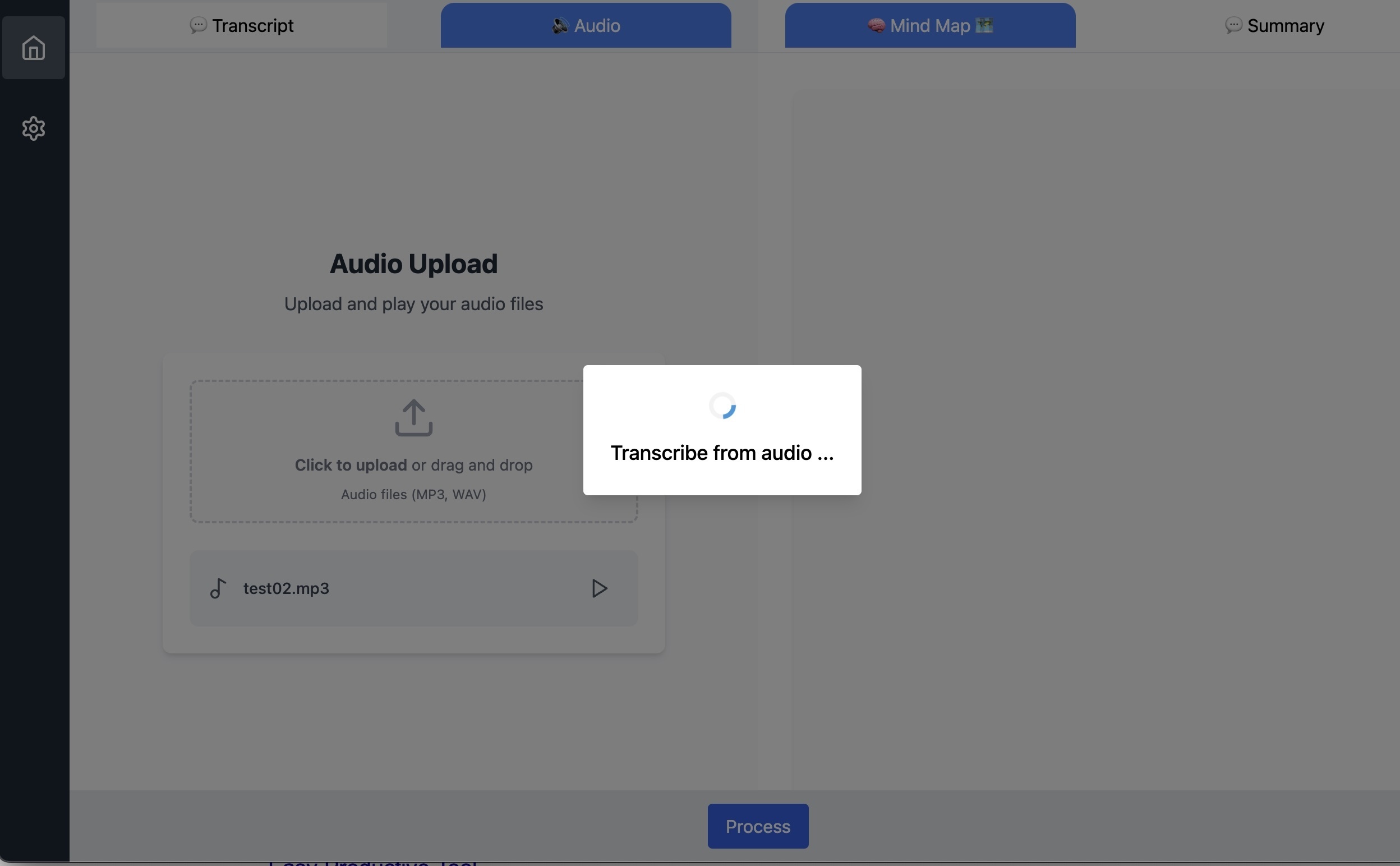

Upload Audio

Transcribe Audio

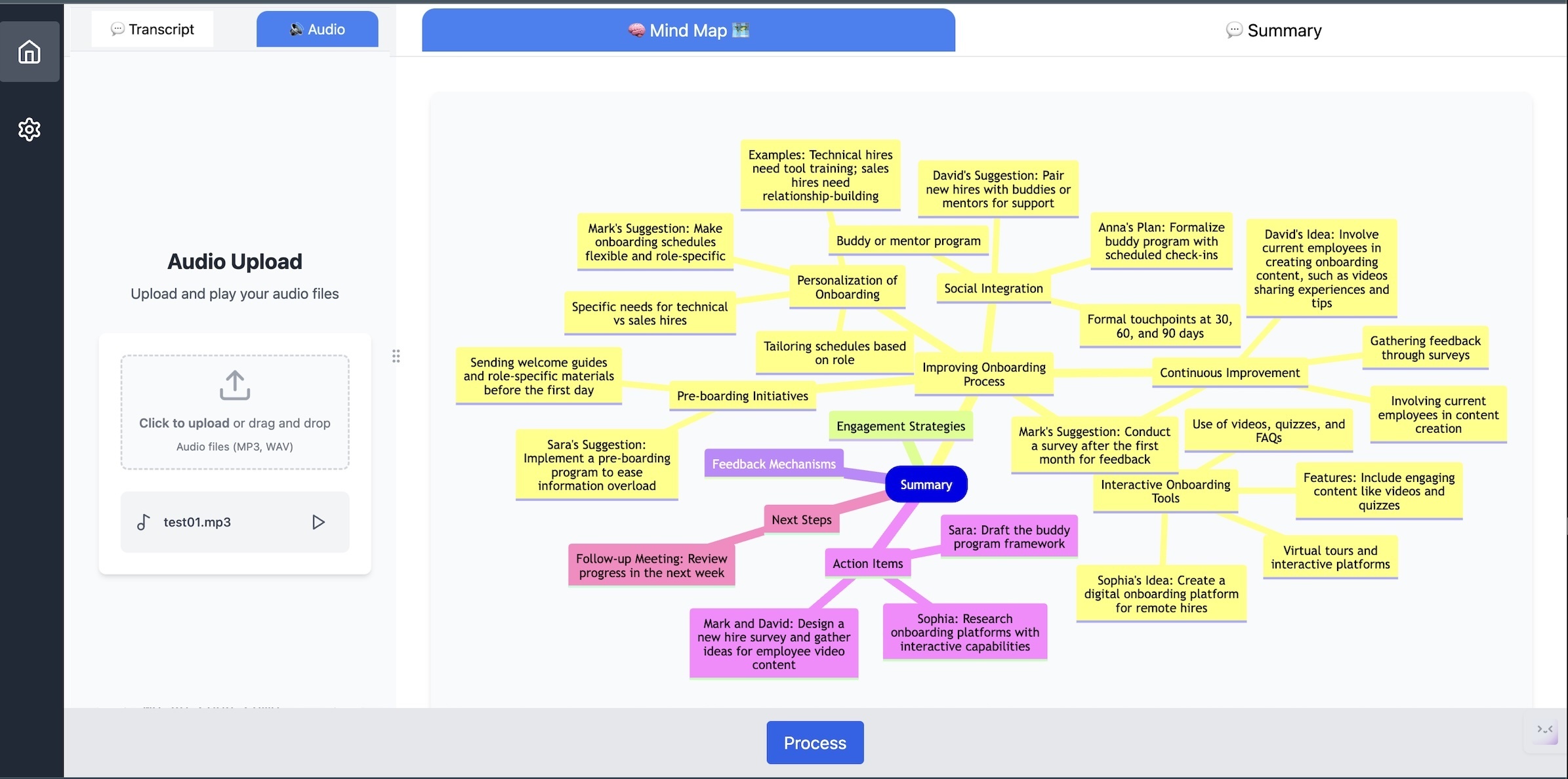

Generate Mindmap Diagram

Conclusion

I think this idea could be further extended to enable different kinds of transcript analysis, for example Problem-Solution Mapping, Questions and Answers Mapping, Topics and Subtopics Mapping, and others. The possibilities are truly endless, and the only limit is imagination 💭🤔💡.

I hope this idea will help create value in some way. In the meantime, enjoy AI coding! 👋